Verification as Velocity: The Missing Step Before Automation

AI systems optimize fastest where success can be verified automatically. Before asking AI to solve something, ask if you can programmatically verify the solution.

Andrej Karpathy recently pointed out something obvious in hindsight: verifiability is the most predictive feature of how well a task can be optimized by AI. Systems improve fastest when they can automatically validate whether they succeeded.

This isn't revolutionary. It's how we've always learned quickly: tight feedback loops, clear success criteria, rapid iteration. But watching AI systems plateau where verification ends makes the pattern clearer. Often the bottleneck isn't the AI's capability; it's our ability to define what "correct" looks like.

Coding assistants excel because tests exist. Game-playing AI optimizes because win conditions are explicit. Creative work plateaus because "good" requires human judgment. The gap isn't about intelligence—it's about measurement.

The Verification Question

Before delegating work to AI, ask: Can I programmatically verify success?

If yes, you've unlocked rapid optimization. Build the verification first, then iterate fast.

If no, you have three options:

- Make it verifiable — Define explicit success criteria, build automated checks, create test cases

- Accept manual review — Know that every iteration requires human evaluation, plan accordingly

- Position the work differently — Break tasks into verifiable components, automate those parts

Most work falls somewhere in the middle: you can verify parts of it. The method is to identify which parts are verifiable and optimize those first.

Verification as Presence Practice

Making work verifiable is a coherence practice. It requires pausing before automating to clarify: What does success actually mean here?

This is Presence as Foundation applied to tooling. You can't automate what you can't define. The act of creating verification criteria forces explicit thinking about goals, edge cases, and acceptable tradeoffs. It converts vague intentions into measurable outcomes.

It also reveals where your understanding is fuzzy. If you can't write a test for it, you probably don't know what "correct" looks like yet.
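
As a small illustration, here is a sketch of what "write the check first" can look like. The task (turning a title into a URL slug), the function names, and the 60-character limit are all assumptions invented for this example. Notice how writing `check_slug` forces decisions that a vague request like "make a nice slug" leaves open.

```python
import re

def check_slug(slug: str) -> list[str]:
    """Return the violated criteria; an empty list means 'correct'."""
    problems = []
    if not slug:
        problems.append("slug is empty")
    # Lowercase letters and digits, separated by single hyphens.
    if not re.fullmatch(r"[a-z0-9]+(-[a-z0-9]+)*", slug):
        problems.append("only lowercase letters, digits, and single hyphens allowed")
    if len(slug) > 60:  # hypothetical length limit
        problems.append("longer than 60 characters")
    return problems

def slugify(title: str) -> str:
    """One candidate implementation; an AI assistant could propose others."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower())
    return slug.strip("-")

if __name__ == "__main__":
    print(check_slug(slugify("Verification as Velocity!")))  # no violations expected
    print(check_slug("Bad Slug"))                            # reports the character rule
```

The checker is deliberately separate from the implementation, so any candidate, human or machine written, can be scored against the same definition of success.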

Positioning for Verification

Alignment over Force shows up here too. Instead of forcing AI into unverifiable domains and accepting slow iteration, position work where verification already exists or can be built.

Practical examples:

- Writing: Can't verify "good prose" automatically, but can verify "no broken links," "consistent terminology," "follows house style," "meets length requirements"

- Design: Can't verify "aesthetically pleasing," but can verify "contrast ratios meet accessibility standards," "all required assets present," "exports at correct dimensions"

- Strategy: Can't verify "smart decision," but can verify "decision uses current data," "follows documented framework," "stakeholders signed off"
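
The contrast-ratio item in the design example is a good case of a check that is already fully specified. Below is a minimal sketch based on the WCAG 2.x definition of relative luminance and contrast ratio. The function names are my own, and the 4.5:1 default is the AA threshold for normal-size text (large text uses 3:1).

```python
def _linearize(channel_8bit: int) -> float:
    """Convert an 8-bit sRGB channel to its linear value (WCAG 2.x formula)."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#rrggbb'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)

def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colors, from 1.0 up to 21.0."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)

def meets_aa(foreground: str, background: str, threshold: float = 4.5) -> bool:
    """True if the pair meets the given contrast threshold."""
    return contrast_ratio(foreground, background) >= threshold

if __name__ == "__main__":
    print(round(contrast_ratio("#000000", "#ffffff"), 2))
    print(meets_aa("#767676", "#ffffff"), meets_aa("#777777", "#ffffff"))
```

A check like this says nothing about whether a palette looks good, but it turns one slice of design review into a pass/fail signal that can run on every iteration.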

The pattern: automate the verifiable parts, reserve human judgment for what remains.
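
For the writing example, a sketch of the verifiable slice might look like the following. The term list, word limits, and function name are hypothetical house rules made up for illustration. The link check only catches markdown links with an empty target; confirming that a URL actually resolves would need a network request and is left out here.

```python
import re

# Hypothetical house rules, invented for this example.
PREFERRED_TERMS = {"e-mail": "email", "web site": "website"}
MIN_WORDS, MAX_WORDS = 5, 1200

def check_draft(text: str) -> list[str]:
    """Return a list of mechanical problems; empty means the draft passes."""
    problems = []

    # Length requirement.
    words = len(text.split())
    if not MIN_WORDS <= words <= MAX_WORDS:
        problems.append(f"length {words} words is outside {MIN_WORDS}-{MAX_WORDS}")

    # Consistent terminology.
    lowered = text.lower()
    for variant, preferred in PREFERRED_TERMS.items():
        if variant in lowered:
            problems.append(f"use '{preferred}' instead of '{variant}'")

    # Markdown links with an empty target, e.g. [text]().
    for label in re.findall(r"\[([^\]]*)\]\(\s*\)", text):
        problems.append(f"link '{label}' has no target")

    return problems

if __name__ == "__main__":
    for problem in check_draft("Send an e-mail via our [contact page]() today please."):
        print(problem)
```

Everything this function reports can be fixed without taste. Whether the prose is actually good stays with the human reviewer.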

Build Verification First

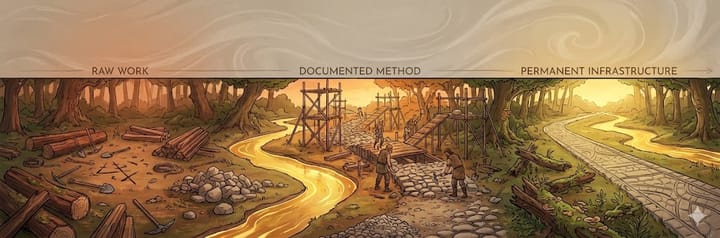

Here's the reusable method:

- Define success explicitly — What does "correct" look like? Write it down.

- Identify verifiable components — Which parts can be checked automatically?

- Build verification tools — Create tests, validators, automated checks

- Iterate with AI — Now rapid optimization is possible for verified parts

- Review manually — Apply human judgment to what verification can't cover

This inverts the usual approach. Most people delegate to AI first, then struggle with vague feedback. Building verification first creates the infrastructure for rapid improvement.
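
The loop this sets up can be sketched in a few lines. Here `propose` stands in for whatever generates candidates (an AI call in practice), and `verify_summary` is a toy verifier with made-up rules. Both names, the eight-word limit, and the required keyword are assumptions for illustration only.

```python
from typing import Callable, Optional

def verify_summary(text: str) -> list[str]:
    """Toy verifier: returns the list of failed criteria."""
    problems = []
    if len(text.split()) > 8:
        problems.append("longer than 8 words")
    if "verification" not in text.lower():
        problems.append("does not mention verification")
    return problems

def iterate(
    propose: Callable[[list[str]], str],
    verify: Callable[[str], list[str]],
    max_rounds: int = 5,
) -> tuple[Optional[str], int]:
    """Ask for candidates until one passes or the round budget runs out.

    Each round passes the previous round's failures back to the proposer.
    Returns (accepted candidate or None, rounds used).
    """
    feedback: list[str] = []
    for round_no in range(1, max_rounds + 1):
        candidate = propose(feedback)
        feedback = verify(candidate)
        if not feedback:
            return candidate, round_no
    return None, max_rounds  # budget exhausted: hand off to manual review

if __name__ == "__main__":
    # Scripted drafts stand in for model output.
    drafts = iter([
        "This long draft rambles about many unrelated things without ever getting to the point",
        "Build verification first.",
    ])
    print(iterate(lambda feedback: next(drafts), verify_summary))
```

The verifier exists before the first candidate is requested, so every round produces specific, machine-readable feedback. When the budget runs out, the `None` result is the signal to fall back to manual review.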

The Limit Case

Some work resists verification entirely. Nuanced judgment, creative exploration, strategic intuition—these don't reduce to pass/fail tests. That's fine. Knowing where verification ends helps you allocate effort appropriately.

Use AI for rapid iteration in verifiable domains. Use human judgment where verification doesn't exist. Don't waste cycles trying to force one approach into the other's territory.

The question isn't "Can AI do this?" It's "Can I verify this?" Answer that first, and the rest follows.

Takeaway

Verifiability unlocks optimization velocity. Before automating anything, build the infrastructure to know whether it worked. That infrastructure (tests, checks, explicit criteria) becomes a lasting capability you can reuse on the next task.

Can someone reuse this tomorrow? Yes. Define success explicitly, build verification for what you can measure, iterate fast there, and reserve judgment for what resists automation.

Build the feedback loop first. Speed follows.