Our institutions are optimized for emergencies that announce themselves—the earthquake, the attack, the market crash. Events that cross a threshold, trigger detection, demand response. The UN Security Council convenes. States of emergency get declared.

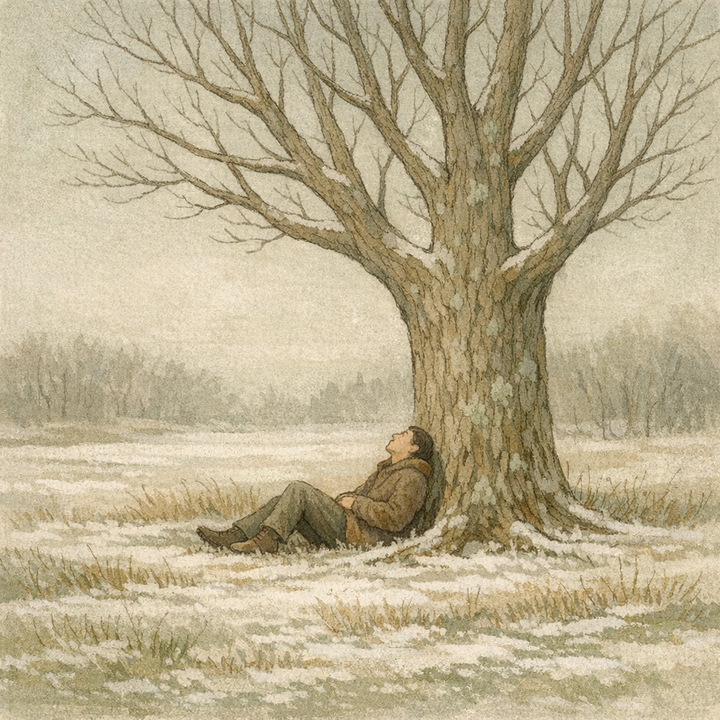

But the dominant disaster form of our era never crosses that threshold. Each day is